Analytics Architecture Framework for Artificial Intelligence Algorithms

Analytics is the new email. Just like email, analytics has to be pervasive, reliably available and real-time, and it has to just work. Delivering this requires an architecture for an analytics organization that can adapt and respond quickly to changing business needs, especially in the new era of artificial intelligence. At Kvantum, we have built an analytics architecture to establish a robust analytics capability that keeps pace with an organization's decision-making needs.

Through this article, we propose the "Analytics Architecture" for an organization to collect, organize and utilize all the information generated by its various functions. Specifically, this article draws on examples from the marketing function to illustrate different aspects of the Analytics Architecture, but it can be applied to any organizational function, including sales, manufacturing, sustainability, supply chain, procurement, finance, human resources, corporate development, etc.

This document is intended for business & technology leaders in any organization who are building analytics solutions and capabilities. It covers our views on the architectural terminology, concepts, and definitions needed to keep pace with the rapid evolution of AI & machine learning technologies.

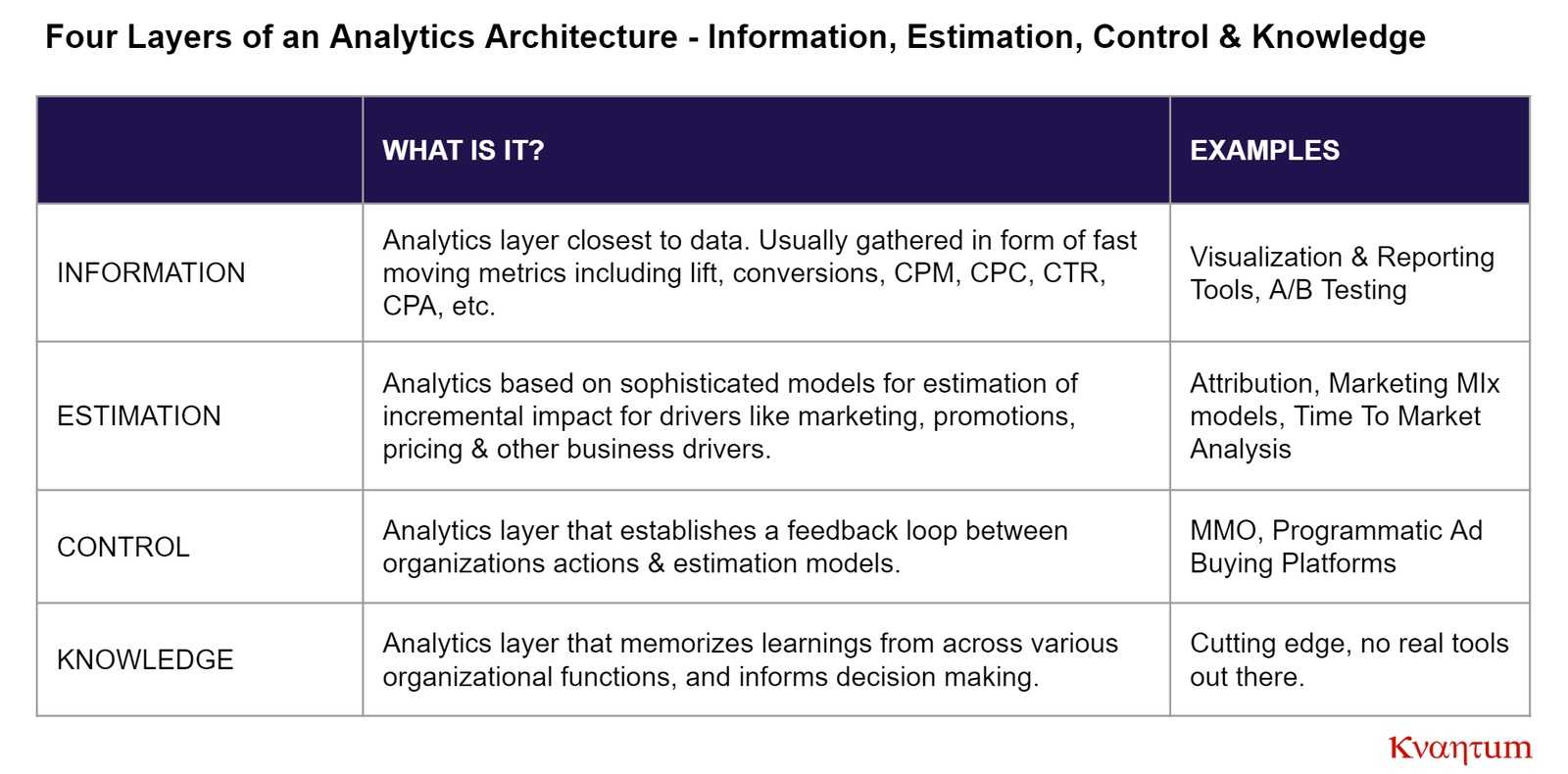

There are four layers to any Analytics Architecture: Information, Estimation, Control and Knowledge. The table below introduces these concepts using examples from the marketing analytics domain.

Exhibit 1

A. INFORMATION LAYER

An organization generates data from its various functions. The first pillar of an analytics architecture is the ability to gather information from the data these functions generate. Of these, the marketing & sales function is especially key to generating always-on insight into the consumer touch-points that drive incremental sales.

There are many examples of companies that have successfully established capabilities to harness data and information. However, these capabilities are mostly predicated on building a central data warehouse or a data lake of some form to store, organize and retrieve this data. While a centralized data lake is essential, the resulting analytics proceeds only at the pace of data clean-up & harmonization, and so cannot keep pace with changes in the data & business environment. This has ramifications not only for timely decision making, but also for the analytics culture. We have seen many companies stifle analytics-based innovation simply because it takes too much work to adapt the underlying data infrastructure & technology stack.

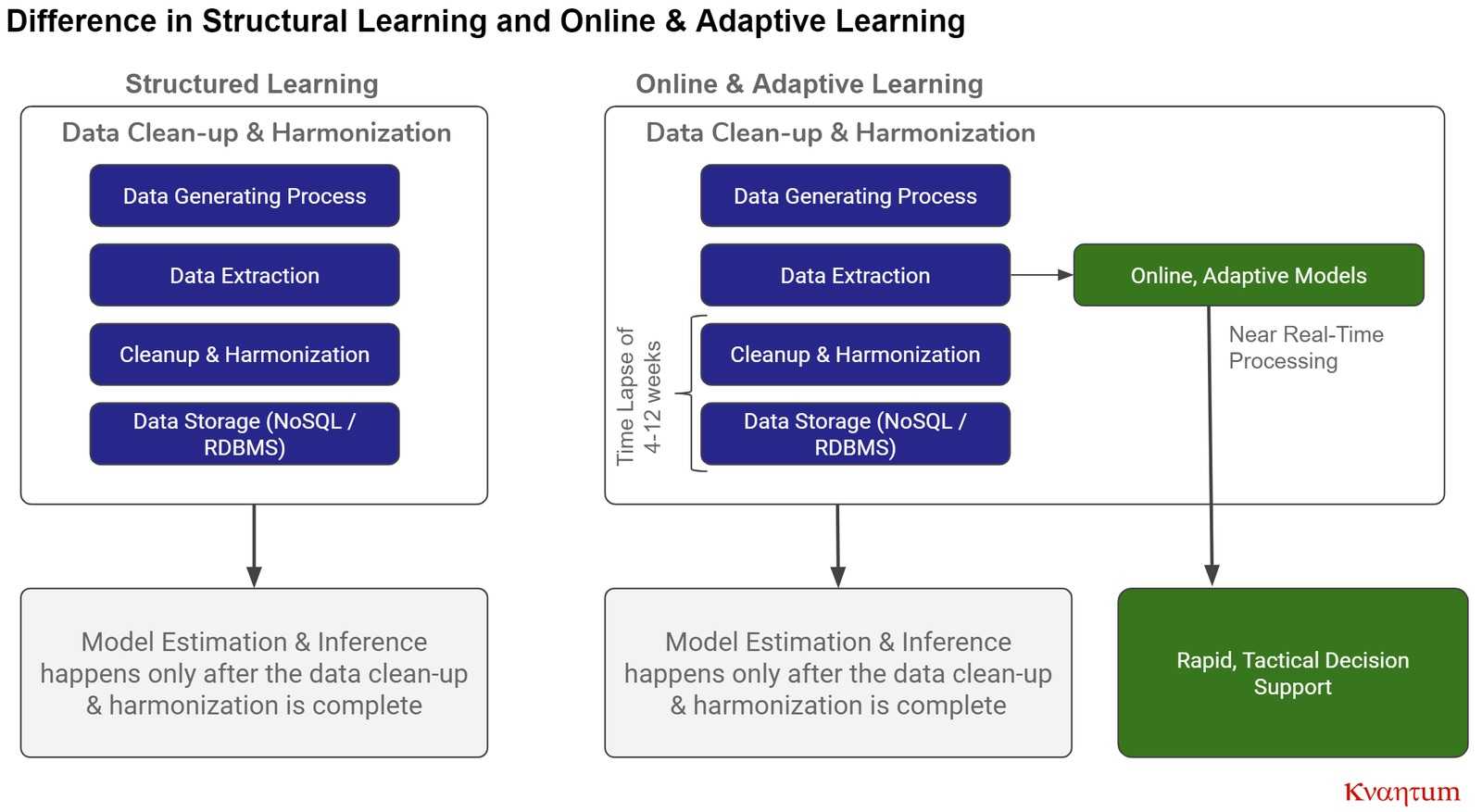

The biggest challenge with the current state of data collection is that organizations collect data but do not turn it into information. Dashboards & reporting tools go a long way in making data accessible, but turning every piece of data into consistent action is the next evolutionary step in this analytics journey. One way to address this challenge is a two-step approach to processing data streams:

- Structured clean-up and harmonization of data.

- Online & adaptive processing of data.

While structured clean-up & harmonization of data is well explored & leveraged in the world of big data analytics, online & adaptive processing of data is where the opportunity lies. Online & adaptive processing means continuously learning the properties of the data as it is generated: correlations, distributions and a myriad of other properties that describe it. The information learnt from the data evolves in real time as the nature of the data changes.
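As a minimal sketch of what "continuously learning data properties" can look like, the Python class below maintains exponentially weighted estimates of the mean, variance and correlation of two streams (for instance, daily spend and daily sales) and updates them one observation at a time. The class name and the forgetting factor `alpha` are illustrative assumptions, not part of any specific product; a larger `alpha` forgets old data faster, which is what lets the learnt properties drift as the data changes.

```python
from dataclasses import dataclass


@dataclass
class OnlineStats:
    """Exponentially weighted mean, variance and covariance of two streams.

    alpha is an assumed forgetting factor in (0, 1]: larger values forget
    older observations faster.
    """
    alpha: float = 0.1
    n: int = 0
    mean_x: float = 0.0
    mean_y: float = 0.0
    var_x: float = 0.0
    var_y: float = 0.0
    cov_xy: float = 0.0

    def update(self, x: float, y: float) -> None:
        self.n += 1
        if self.n == 1:
            # The first observation only initialises the means.
            self.mean_x, self.mean_y = x, y
            return
        a = self.alpha
        dx, dy = x - self.mean_x, y - self.mean_y
        # Incremental exponentially weighted updates.
        self.mean_x += a * dx
        self.mean_y += a * dy
        self.var_x = (1 - a) * (self.var_x + a * dx * dx)
        self.var_y = (1 - a) * (self.var_y + a * dy * dy)
        self.cov_xy = (1 - a) * (self.cov_xy + a * dx * dy)

    @property
    def corr(self) -> float:
        denom = (self.var_x * self.var_y) ** 0.5
        return self.cov_xy / denom if denom > 0 else 0.0


# Hypothetical daily (spend, sales) readings arriving one at a time.
stats = OnlineStats(alpha=0.2)
for spend, sales in [(10, 105), (12, 111), (9, 101), (15, 121), (11, 108)]:
    stats.update(spend, sales)
print(f"mean spend={stats.mean_x:.2f}, spend-sales corr={stats.corr:.3f}")
```

Each update costs a handful of arithmetic operations and needs no stored history, which is what makes this style of learning feasible at the pace of data generation.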

There are a variety of tools/techniques being used successfully for this type of analysis, including wavelet decomposition, variational auto-encoders, Markov chains, Bayesian networks & generative adversarial networks. We will provide a detailed exposition of the real, practical role of these tools and many more in a subsequent white paper. For now, it suffices to say that there are many practical, actionable tools that enable learning about the data at the pace of data generation. This learning is then converted into information on a real-time basis.
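Of the tools listed above, a Markov chain is the simplest to show in a few lines. The sketch below assumes consumer journeys arrive as ordered lists of touch-point names (the names are hypothetical) and updates transition counts as each journey is observed, so transition probabilities are always current without a batch re-fit.

```python
from collections import defaultdict


class OnlineMarkovChain:
    """First-order Markov chain over touch-points, updated journey by journey."""

    def __init__(self, smoothing: float = 0.0):
        # Optional additive (Laplace-style) smoothing; 0.0 means raw frequencies.
        self.smoothing = smoothing
        self.counts = defaultdict(lambda: defaultdict(float))
        self.states = set()

    def observe_path(self, path):
        """Add the transitions from one ordered journey to the running counts."""
        for src, dst in zip(path, path[1:]):
            self.counts[src][dst] += 1.0
            self.states.update((src, dst))

    def transition_prob(self, src, dst):
        """Current estimate of P(next = dst | current = src)."""
        row = self.counts.get(src, {})
        total = sum(row.values()) + self.smoothing * len(self.states)
        if total == 0:
            return 0.0
        return (row.get(dst, 0.0) + self.smoothing) / total


# Hypothetical journeys streaming in.
chain = OnlineMarkovChain()
chain.observe_path(["search", "display", "purchase"])
chain.observe_path(["search", "social", "purchase"])
chain.observe_path(["search", "display", "exit"])
print(chain.transition_prob("search", "display"))
```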

Exhibit 2

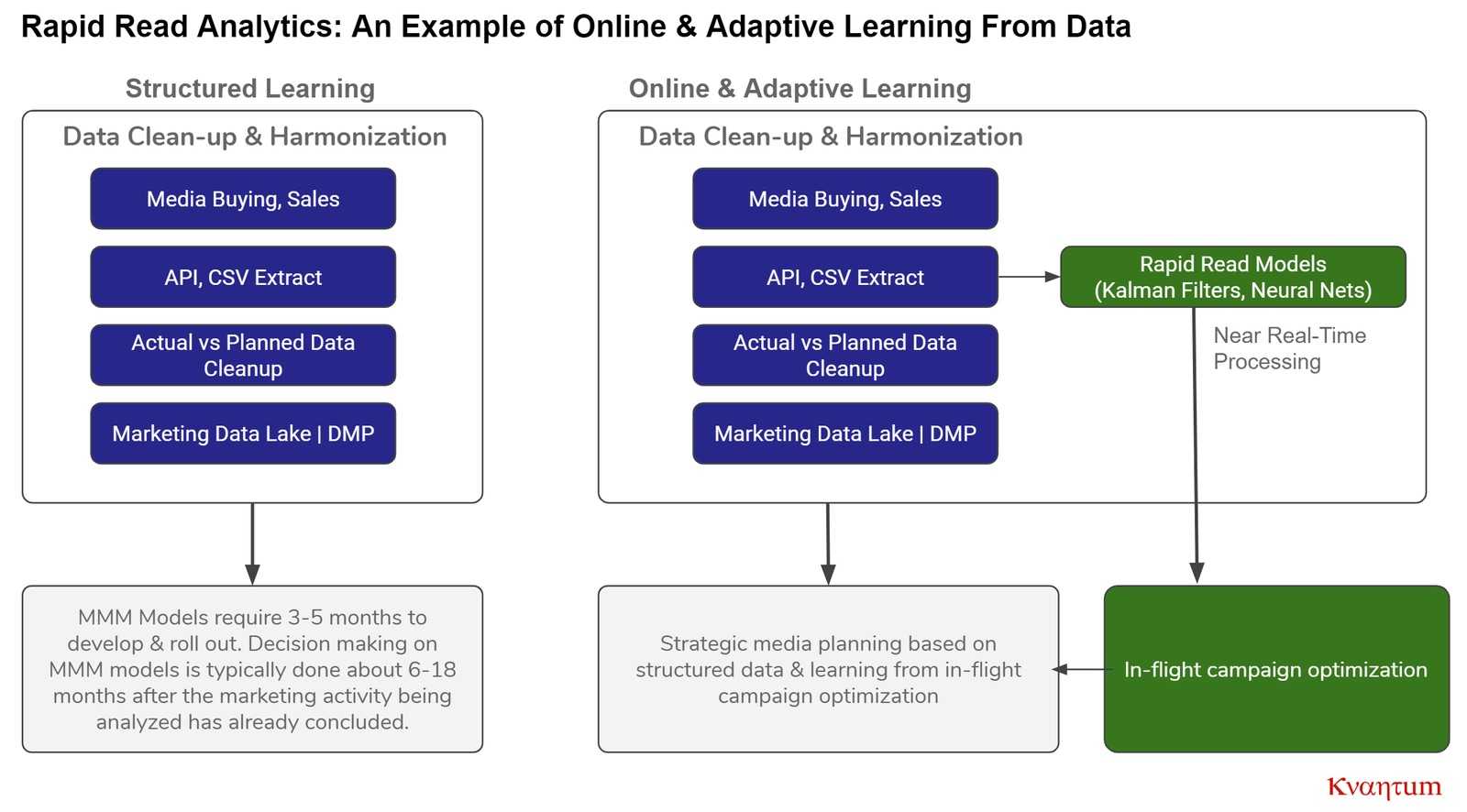

One example of the online & adaptive approach to learning is in-flight optimization of marketing campaigns. Using only structured learning, marketing performance models typically take 6 to 18 months to estimate marketing performance. By that time, most of the insights from such analysis hold value only for strategic, macro-level planning. By combining online/adaptive learning with structured learning models, it is possible to estimate the performance of each marketing tactic in near real time. With this insight, a brand can determine the tactic-level spend, target audience segments and ad frequency required to drive the highest possible incremental sales impact.
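One simple way to combine a structured prior with in-flight data, shown here as an assumption rather than a description of any particular production system, is a conjugate Bayesian update: the structured model supplies a prior belief about a tactic's ROI, and each new in-flight reading sharpens it. The class name, the noise variance `obs_var` and all tactic values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TacticROI:
    """Normal-normal conjugate belief about one tactic's ROI.

    mean/var: prior assumed to come from a structured model (e.g. an MMM).
    obs_var: assumed noise variance of a single in-flight ROI reading.
    """
    mean: float
    var: float
    obs_var: float

    def update(self, observed_roi: float) -> None:
        # Posterior is the precision-weighted average of belief and reading.
        prior_precision = 1.0 / self.var
        obs_precision = 1.0 / self.obs_var
        post_precision = prior_precision + obs_precision
        self.mean = (prior_precision * self.mean
                     + obs_precision * observed_roi) / post_precision
        self.var = 1.0 / post_precision


# Hypothetical tactics: priors from a structured model, then weekly readings.
tactics = {
    "paid_social": TacticROI(mean=1.4, var=0.25, obs_var=0.5),
    "online_video": TacticROI(mean=1.1, var=0.25, obs_var=0.5),
}
weekly_readings = {"paid_social": [0.9, 1.0, 0.8], "online_video": [1.6, 1.5, 1.7]}
for name, readings in weekly_readings.items():
    for roi in readings:
        tactics[name].update(roi)
best = max(tactics, key=lambda k: tactics[k].mean)
print(best, {k: round(v.mean, 2) for k, v in tactics.items()})
```

In this made-up example, three weeks of in-flight readings reverse the ranking implied by the structured prior. How quickly the estimate moves toward new evidence is governed by the ratio of `var` to `obs_var`.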

Exhibit 3

B. ESTIMATION LAYER

While most organizations run on information gathered from data, truly advanced organizations rely on being able to estimate the impact of various actions and external factors on business outcomes. This estimation takes the form of different models used across organizational functions. In marketing specifically, these include marketing mix models, attribution, brand equity, sales forecasting, customer lifetime value (CLTV) & customer acquisition models. Additionally, there are models that estimate the impact of pricing changes, sampling, coupons, merchandising & trade promotions.

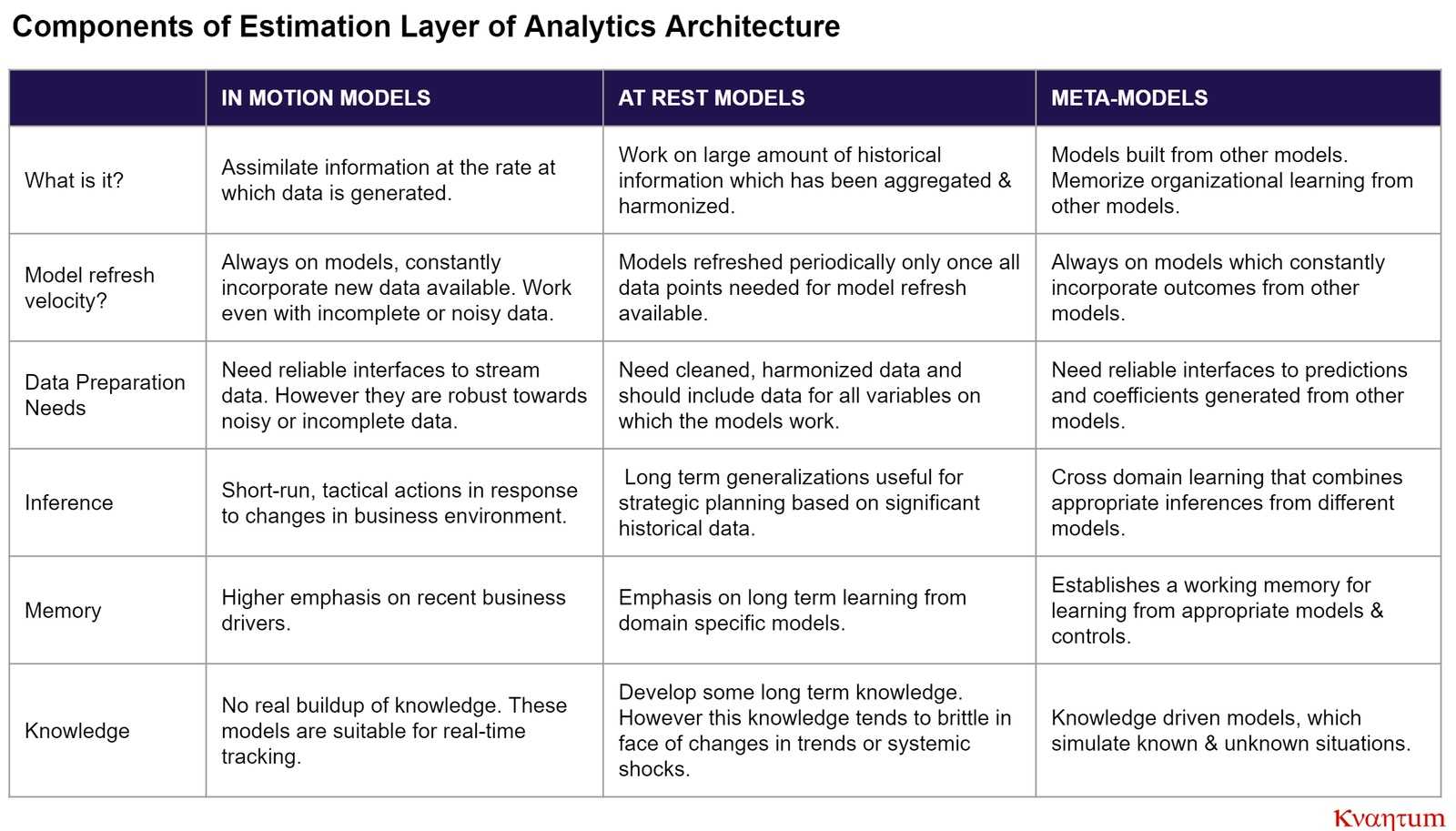

However, very rarely do we find an analytics framework that also looks at these models from the perspective of actionability. An organization's estimation capability needs to support three types of models: In-motion models, At-rest models, and Meta models.

A. In-motion Models

These models assimilate data & information at the rate at which the data is generated. This may be real-time, daily, weekly or even monthly. However, the crucial characteristic of these models is that they consume data as it happens. To do that, a model must be able to separate the underlying information from the noise inherent in short-run data series, account for possible errors & omissions in the data, and still provide a reliable, actionable outcome.

This outcome should be granular and actionable, and be available directly to the executor of a business action. If it is being **“vetted”** by an intermediary human analytics layer before it is used, then it is already stale information.
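Separating signal from short-run noise, while tolerating missing readings, is exactly what a state-space filter does. As one illustrative choice (the article does not prescribe a specific technique), the sketch below runs a local-level Kalman filter, which treats the true level as a random walk observed with noise. `process_var` and `obs_var` are assumed tuning parameters, and `None` marks a missing reading, which the filter handles by carrying its prediction forward.

```python
from typing import List, Optional


def local_level_filter(
    observations: List[Optional[float]],
    process_var: float,
    obs_var: float,
    init_mean: float = 0.0,
    init_var: float = 1e6,
) -> List[float]:
    """Kalman filter for a local-level model: level_t = level_{t-1} + w_t,
    y_t = level_t + v_t, with Var(w) = process_var and Var(v) = obs_var.
    A None reading is treated as missing. Returns the filtered level per period."""
    mean, var = init_mean, init_var
    levels = []
    for y in observations:
        # Predict: the level may have drifted since the last period.
        var += process_var
        if y is not None:
            # Update: move toward the reading in proportion to the Kalman gain.
            gain = var / (var + obs_var)
            mean += gain * (y - mean)
            var *= 1.0 - gain
        levels.append(mean)
    return levels


# Hypothetical daily conversions with noise and one missing day.
daily = [120, 135, 110, None, 128, 142, 118, 131]
print([round(v, 1) for v in local_level_filter(daily, process_var=4.0, obs_var=100.0)])
```

A small `process_var` relative to `obs_var` produces a smooth, slow-moving level; raising it makes the model react faster to genuine shifts at the cost of passing through more noise.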

B. At-Rest Models

These models are best used for long-term planning & strategizing, not necessarily for immediate, short-term action. They work on large amounts of historical (but relevant) information and provide broad-strokes guidance for future action. Given the strategic emphasis of these models, it is imperative that they have long-term memory and use a sanitized, reliable version of the data. Marketing mix models are a typical example.
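To make the idea of long-term memory concrete, the sketch below shows the core of a very simple marketing mix model: a geometric adstock transform that carries a share of past advertising into later periods, an ordinary least squares fit of sales on the adstocked spend, and a grid search over the decay rate. It is deliberately minimal and assumes a single channel with no saturation curve or control variables; a production model would include these. The function names and data are hypothetical.

```python
def geometric_adstock(spend, decay):
    """Carry a share `decay` of the previous period's ad stock into this period."""
    stock, out = 0.0, []
    for s in spend:
        stock = s + decay * stock
        out.append(stock)
    return out


def fit_simple_mmm(spend, sales, decay):
    """OLS fit of sales = base + beta * adstock(spend); returns (base, beta)."""
    x = geometric_adstock(spend, decay)
    n = len(x)
    mx, my = sum(x) / n, sum(sales) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, sales))
    beta = sxy / sxx
    return my - beta * mx, beta


def best_decay(spend, sales, grid):
    """Choose the decay rate whose fit leaves the smallest squared error."""
    def sse(decay):
        base, beta = fit_simple_mmm(spend, sales, decay)
        x = geometric_adstock(spend, decay)
        return sum((y - base - beta * xi) ** 2 for xi, y in zip(x, sales))
    return min(grid, key=sse)


# Hypothetical weekly spend, with sales generated as 100 + 2 * adstock(spend, 0.5).
spend = [10, 0, 0, 20, 5, 0, 15, 0, 0, 8]
sales = [100 + 2 * a for a in geometric_adstock(spend, 0.5)]
decay = best_decay(spend, sales, [0.0, 0.25, 0.5, 0.75])
print(decay, fit_simple_mmm(spend, sales, decay))
```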

We also find that marketing analytics organizations sometimes blend in-motion & at-rest models, while still clearly distinguishing the purpose of each when considering actionable outcomes.

C. Meta Models

These models are built on top of other models. They serve as a kind of executive memory for how the organization assimilates learning from models across different business functions into a consistent view of the business. For example, these models may combine marketing mix models, pricing studies, segmentation outcomes & inventory flow to establish a consistent view of the drivers of sales, and also provide the likely impact when any of the business levers is used to drive growth.
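A meta model can be sketched, under a strong simplifying assumption, as a single log-linear view that reuses elasticities estimated by separate component models. The elasticity values below are hypothetical placeholders, and the multiplicative (constant-elasticity) combination rule is an assumption chosen because it makes the effects of several levers compose consistently.

```python
import math

# Hypothetical elasticities, each taken from a different component model.
ELASTICITIES = {
    "media_spend": 0.08,   # e.g. from a marketing mix model
    "price": -1.2,         # e.g. from a pricing study
    "distribution": 0.6,   # e.g. from an inventory / distribution model
}


def predicted_sales_change(lever_changes, elasticities=ELASTICITIES):
    """Predicted relative sales change under a constant-elasticity
    (log-linear) assumption.

    lever_changes maps a driver to its relative change,
    e.g. {"price": -0.05} for a 5% price cut.
    """
    log_effect = sum(
        elasticities[driver] * math.log1p(change)
        for driver, change in lever_changes.items()
    )
    return math.expm1(log_effect)


plan = {"media_spend": 0.10, "price": -0.03}
print(f"{predicted_sales_change(plan):+.2%}")
```

Under this rule, the combined effect of two levers equals the product of their individual effects, so the answer does not depend on the order in which levers are considered.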

Exhibit 4

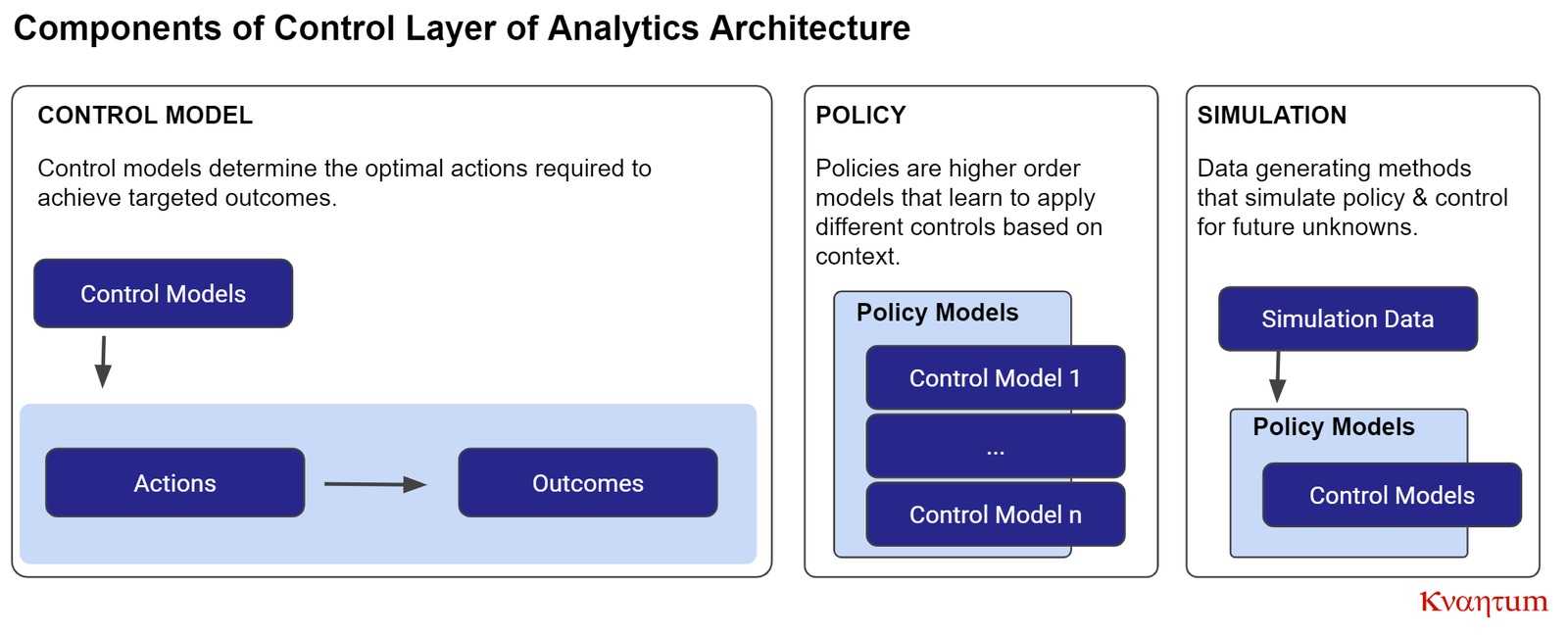

C. CONTROL LAYER

Actionability that is timely & adapts to the marketing environment requires the concept of control. It is not enough to understand the drivers of marketing performance; an organization must also establish the right set of changes to further optimize the desired business outcomes.

Setting up the right algorithmic controls & associated processes establishes actionability and enables an organization to track the efficacy of various actions. Control-based analytics can be further divided into the following components: Control, Policy and Simulation.

Exhibit 5

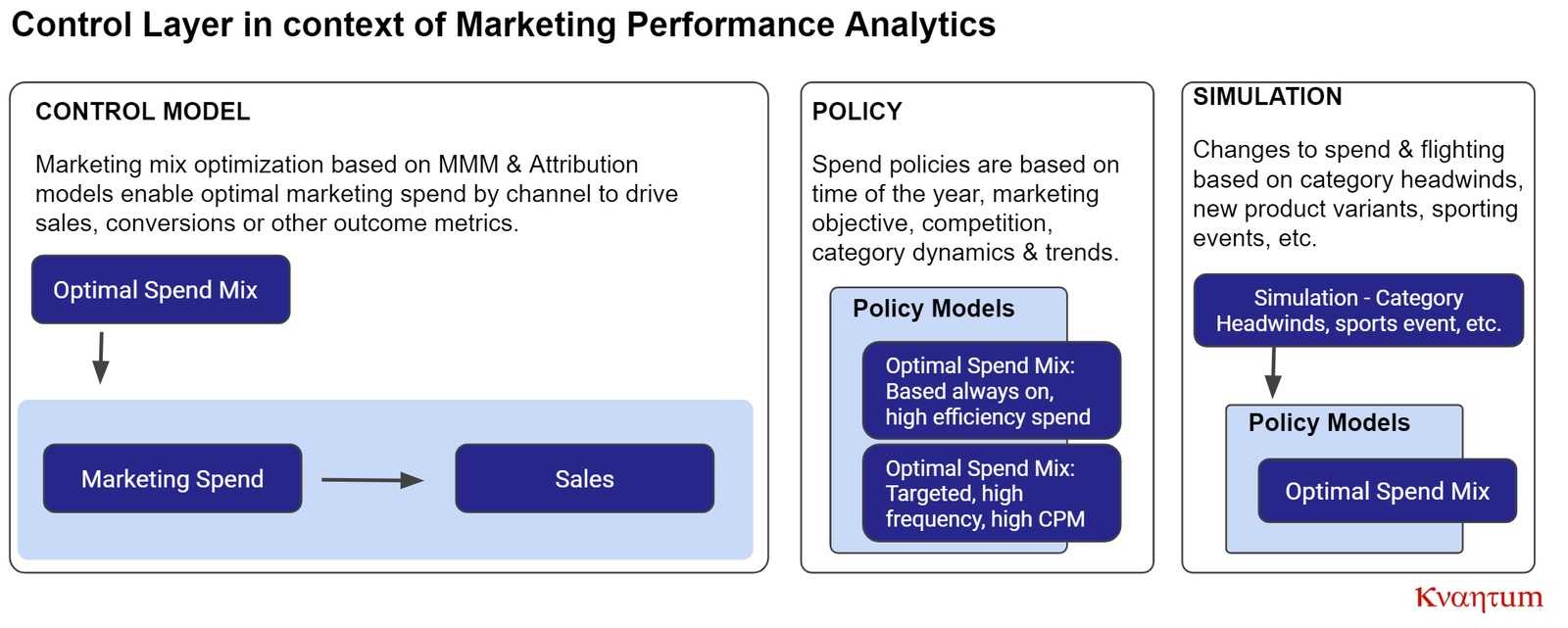

A. Control Model

The control model is the first step in establishing actionability from an algorithmic outcome. For every model that is estimated, there has to be a corresponding action that can be used to control the outcome.

An example of such a control model, in a marketing performance context, would be to maximize the sales impact of marketing spend by changing the mix of marketing channels. To drive the maximum possible sales impact, there is an optimal level of marketing spend and an optimal set of marketing channels. If the objective of the analytic process changes from sales to customer acquisition, then the associated marketing mix also needs to adapt. Hence, the way an organization makes a model actionable depends on the outcome it is trying to control.
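The spend-allocation control described above can be illustrated with a small optimizer. The sketch assumes each channel has a concave, diminishing-returns response curve of the form `max_lift * (1 - exp(-spend / scale))`; the curve shape, the function names and all channel parameters are hypothetical. Budget is handed out in fixed increments to whichever channel adds the most outcome next, which is optimal (up to the step size) when the curves are concave and independent.

```python
import math


def response(spend, max_lift, scale):
    """Assumed concave response curve: diminishing returns as spend grows."""
    return max_lift * (1.0 - math.exp(-spend / scale))


def marginal_gain(channels, alloc, name, step):
    """Extra outcome from giving `step` more budget to channel `name`."""
    max_lift, scale = channels[name]
    return (response(alloc[name] + step, max_lift, scale)
            - response(alloc[name], max_lift, scale))


def allocate_budget(budget, channels, step=1.0):
    """Greedy marginal allocation of `budget` across channels.

    channels maps a name to (max_lift, scale). For concave, independent
    response curves, greedy increments are optimal up to the step size.
    """
    alloc = {name: 0.0 for name in channels}
    for _ in range(int(budget // step)):
        best = max(alloc, key=lambda name: marginal_gain(channels, alloc, name, step))
        alloc[best] += step
    return alloc


# Hypothetical response curves estimated against a sales objective.
sales_curves = {"tv": (100.0, 50.0), "search": (40.0, 10.0), "display": (20.0, 20.0)}
print(allocate_budget(100, sales_curves))
```

Switching the objective from sales to customer acquisition amounts to passing a different set of response curves, estimated against the new outcome, and the same routine then returns a different mix.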

B. Policy

On its own, the concept of control is somewhat simplistic, as it simply represents the model-specific variables that need to be controlled to drive an outcome. In real life, however, establishing control requires understanding the life-cycle of control. Let’s use marketing optimization as an example to illustrate this further.

Assume that the marketing mix or attribution modeling process recommends an increase in spend on programmatic display. The increase in spend brings multiple nuances with it, including the right bid metric (CPM or CPC), bid levels, the media metrics to optimize (viewability, CTR, CPA, etc.), weekly spend levels, dark periods, frequency & reach. There are multiple routes to the same outcome, depending on the media and brand strategy.

- One approach would be to target a narrowly defined audience at a high frequency, expecting a high conversion rate.

- Another approach can be to buy low CPM, high efficiency impressions with a very wide reach.

- Or it may be a combination of both, depending on the campaign objective. If you are selling pizza and trying to convince a high-value repeat buyer to stay with your brand, you are probably going to buy higher-CPM retargeting with a promotion geared towards conversion. However, if you are targeting a deal seeker who tends to switch brands, you are essentially casting a wider but cheaper net.

For an organization, it is imperative to codify various controls into appropriate policies that can then be applied to a model outcome depending on the advertising context. A class of AI algorithms based on reinforcement learning should be used to determine the right policy to apply to a specific control requirement, as well as to adapt policies as needed.
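Bandit algorithms are among the simplest members of the reinforcement learning family, and they fit the policy-selection problem described above. The sketch below keeps, for each audience context, a Beta posterior over the conversion rate of each buying policy and picks a policy by Thompson sampling. The class name, the context and policy names, and the use of a binary conversion as the only reward are illustrative assumptions; the simulated conversion rates are invented and deliberately far apart.

```python
import random


class ContextualThompsonPolicy:
    """Pick a buying policy per audience context via Beta-Bernoulli
    Thompson sampling; reward is assumed to be a binary conversion."""

    def __init__(self, contexts, policies, seed=None):
        self.rng = random.Random(seed)
        # Beta(1, 1) (uniform) prior on each policy's conversion rate, per context.
        self.posterior = {c: {p: [1.0, 1.0] for p in policies} for c in contexts}

    def choose(self, context):
        # Sample a plausible conversion rate for each policy; play the best draw.
        draws = {p: self.rng.betavariate(a, b)
                 for p, (a, b) in self.posterior[context].items()}
        return max(draws, key=draws.get)

    def record(self, context, policy, converted):
        # Conjugate update: successes increment alpha, failures increment beta.
        params = self.posterior[context][policy]
        params[0 if converted else 1] += 1.0


# Hypothetical simulation: true conversion rates are invented for illustration.
TRUE_RATES = {
    "loyal_repeat_buyer": {"retarget_high_freq": 0.20, "broad_low_cpm": 0.05, "mixed": 0.10},
    "deal_seeker": {"retarget_high_freq": 0.05, "broad_low_cpm": 0.20, "mixed": 0.10},
}
agent = ContextualThompsonPolicy(TRUE_RATES, ["retarget_high_freq", "broad_low_cpm", "mixed"], seed=7)
env = random.Random(11)
picks = {c: {p: 0 for p in TRUE_RATES[c]} for c in TRUE_RATES}
for _ in range(3000):
    for context, rates in TRUE_RATES.items():
        policy = agent.choose(context)
        picks[context][policy] += 1
        agent.record(context, policy, env.random() < rates[policy])
print(picks)
```

Because the posteriors keep updating, the preferred policy for a context shifts automatically if its conversion behaviour changes, which is the "policy adaptation" described above in its simplest form.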

C. Simulation

The third key component of Control is simulation. Simulation enables learning about the unknown. Through the use of models & associated control algorithms, it is possible to evolve a reliable decision support system that prescribes the best action under known circumstances. So if the marketing or sales environment is changing incrementally, these models can help track and predict the best way to react to change. However, if the environment changes in a way the business has never seen before, then the simulation aspect of control is required.

For instance, let’s take the automobile industry. For a brand like Toyota Scion, it is relatively easy to build a model that predicts marketing effectiveness if its target consumers shift from Facebook & Instagram to Snapchat. However, if the brand needs to establish a reliable way to react to an industry shift towards self-driving electric cars owned by a third party such as Uber, then models of ongoing marketing performance will not help.

To deal with such unknowns, the brand needs a simulation framework built on its marketing models as well as other sources of exogenous information. Such simulation engines provide robustness and future-proofing to decision making.
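A simulation engine of this kind can be sketched with Monte Carlo sampling. Below, a model-based baseline and marketing lift are combined with an exogenous scenario in which a share of demand disappears (for instance, as buyers move to shared, third-party-owned vehicles). Every number, the uniform distributions and the function name are hypothetical; the point is that the output is a range of outcomes per scenario rather than a single forecast.

```python
import random
import statistics


def simulate_sales(n_runs, baseline, marketing_lift, lost_share_range, seed=0):
    """Monte Carlo scenario sketch (all inputs hypothetical).

    baseline, marketing_lift: model-based estimates for the brand.
    lost_share_range: (low, high) fraction of demand lost to an exogenous
    structural shift, sampled uniformly.
    """
    rng = random.Random(seed)
    outcomes = []
    for _ in range(n_runs):
        # Parameter uncertainty in the modelled lift (assumed +/-20%).
        lift = marketing_lift * rng.uniform(0.8, 1.2)
        # Exogenous shock drawn from the scenario range.
        lost_share = rng.uniform(*lost_share_range)
        outcomes.append((baseline + lift) * (1.0 - lost_share))
    deciles = statistics.quantiles(outcomes, n=10)
    return {"p10": deciles[0], "p50": statistics.median(outcomes), "p90": deciles[-1]}


status_quo = simulate_sales(5000, baseline=1000.0, marketing_lift=200.0, lost_share_range=(0.0, 0.0))
shift = simulate_sales(5000, baseline=1000.0, marketing_lift=200.0, lost_share_range=(0.1, 0.4))
print("status quo:", status_quo)
print("ownership shift:", shift)
```

Comparing the percentile bands across scenarios shows whether a marketing response could plausibly offset the shift, or whether the business would need a different lever altogether.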

Exhibit 6

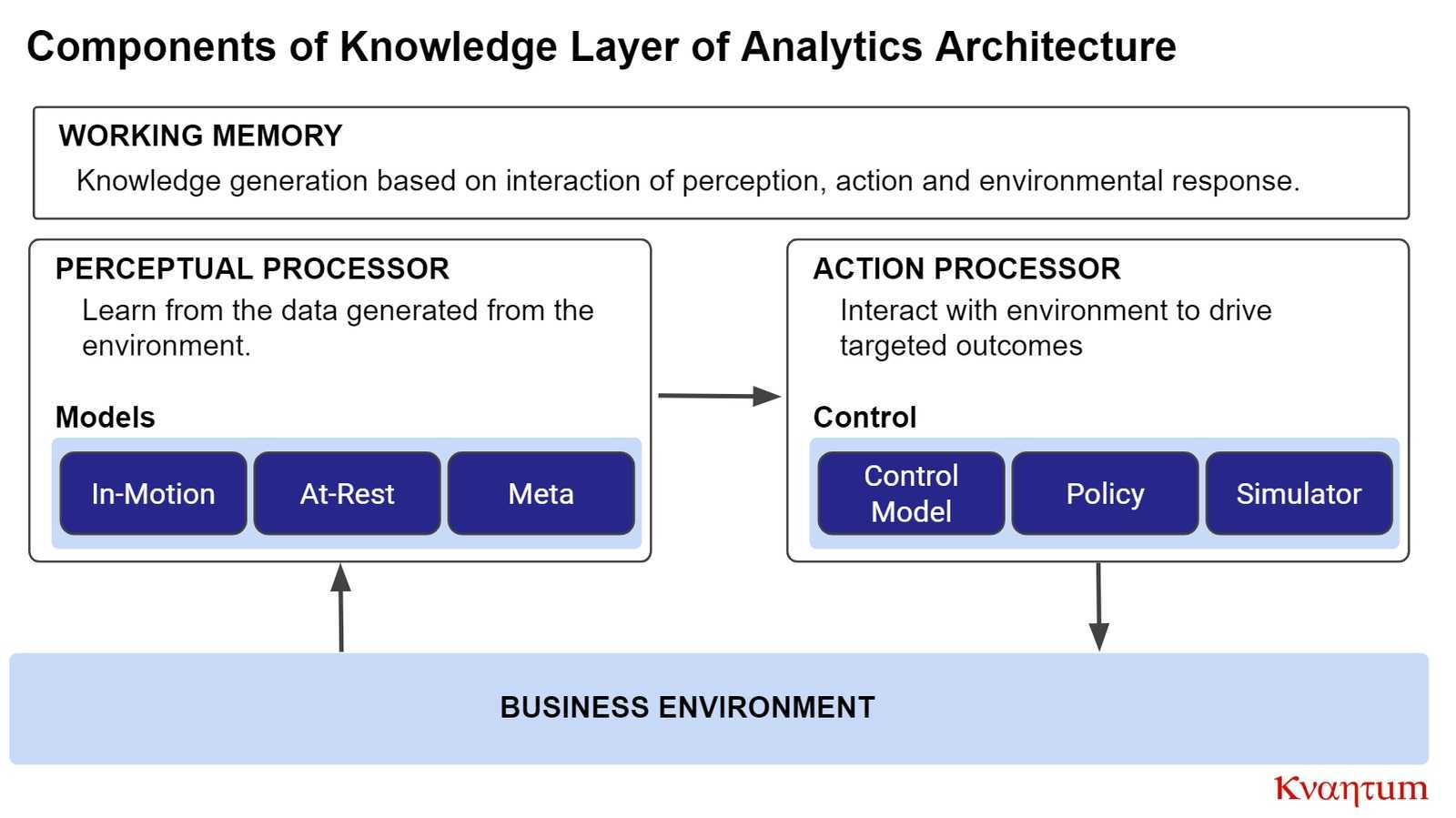

D. KNOWLEDGE LAYER

Managing knowledge is an important part of an organization’s competitive edge. Organizations that leverage knowledge better and collaborate on common knowledge can react & respond a lot faster to opportunities or challenges. Many organizations have put serious effort, investment & publicity into showcasing the role of AI in organization-wide knowledge management. Despite these efforts, knowledge management remains quite archaic and relies primarily on data, content and meta-information about this data.

There is minimal association between knowledge-gathering algorithms and the information gathered from the organization’s analytics models, or the feedback generated from applying these models in a real business environment. Harnessing knowledge is the most important aspect of the role of AI/machine learning/statistics in an organization.

In our humble opinion, nobody establishes a framework for knowledge extraction better than Simon Haykin, through his writings on cognitive dynamic systems. We have adapted his generic framework to a more specific business analytics construct below.

Exhibit 7

Through this cognitive dynamic systems framework, the knowledge layer can be further explained through the following components.

A. Perceptual Processor

The starting point of knowledge gathering is the set of models & methods used to understand the environment that the brand operates in. Meta-learning algorithms interrogate these models for common patterns in data streams, helping establish recognition of environmental stimuli.

As an example, consider a diaper brand that monitors shipment patterns to its retail partners. Past models for this brand indicate that adverse weather events like hurricanes lead consumers to hoard diapers, causing a large spike in sales in a very specific geographical area. This creates two additional downstream issues: a shift in the purchase cycle, because the consumer has probably stored more diapers than needed, and a potential loss of sales to private label, because the local retailer ran out of stock before it could meet demand.

Today, almost all analytics teams would model this mechanism post-facto when their models are unable to explain these sales spikes. However, if a perceptual learner is in place, then the organization can algorithmically anticipate the likely shift in demand patterns before the next adverse event happens in a different location.
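A minimal version of such a perceptual learner, assuming sales around past events have already been expressed as weekly ratios of actual to expected sales, is an event-study profile: average the post-event ratios across past events and apply them to the baseline forecast for the next affected region. The function names and the event data below are invented for illustration.

```python
from statistics import mean


def learn_event_profile(past_events, horizon):
    """Average week-by-week sales ratio (actual / expected) after past events.

    Each event is a list of weekly ratios starting in the event week: values
    above 1 reflect hoarding spikes, values below 1 the pulled-forward
    purchase cycle or sales lost to private label.
    """
    return [mean(event[week] for event in past_events) for week in range(horizon)]


def anticipate(baseline_forecast, profile):
    """Apply a learned event profile to a new region's baseline forecast."""
    return [base * ratio for base, ratio in zip(baseline_forecast, profile)]


# Invented ratios for two past hurricanes in different regions.
past_hurricanes = [
    [1.8, 0.70, 0.85, 1.00],
    [1.6, 0.75, 0.90, 1.00],
]
profile = learn_event_profile(past_hurricanes, horizon=4)
print(anticipate([500, 500, 500, 500], profile))
```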

From an algorithmic perspective, environmental perception is supported by algorithms like orthogonal transforms, factor analysis, clustering, distribution learners (VAEs), generative algorithms, blind source separation, time-domain decomposition, etc. The purpose of all these algorithms is to understand the nature of data in the context of the estimation models described earlier in this document.
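As an illustration of the orthogonal-transform family, the sketch below extracts the leading principal component of a set of series (for example, weekly sales in several regions) by power iteration on their sample covariance matrix. It is written in plain Python for self-containment, and the function name and data are hypothetical; in practice a linear algebra library would be used. The loadings show how strongly each series moves with the dominant common pattern.

```python
import math
import random


def first_principal_component(rows, iters=200, seed=0):
    """Leading principal component of `rows` (observations x variables),
    found by power iteration on the sample covariance matrix.
    Returns (unit loading vector, variance explained by it)."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    rng = random.Random(seed)
    v = [rng.uniform(-1.0, 1.0) for _ in range(d)]
    for _ in range(iters):
        # Repeated multiplication by the covariance aligns v with its top eigenvector.
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eigenvalue = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
    return v, eigenvalue


# Hypothetical weekly sales for three regions that share a common driver.
weeks = [[100, 52, 80], [110, 57, 87], [95, 49, 77], [120, 63, 95], [105, 54, 84]]
loadings, variance = first_principal_component(weeks)
print([round(x, 3) for x in loadings], round(variance, 2))
```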

B. Action Processor

The action processor learns about the organization’s response to the marketing (& business) environment based on the models & controls discussed above. This learner effectively creates a knowledge base of the algorithmic/model outcomes & the practical adherence of the organization’s actors to these outcomes.

As an example, let’s assume that the marketing attribution models recommend that a brand increase reach for a specific target audience segment to improve marketing effectiveness. When the brand’s agency attempts to buy more ad inventory based on this recommendation, it turns out that it cannot adhere to the recommendation because the brand has already maxed out the available ad inventory. The role of the action processor in such a scenario is to learn that there is a real, practical constraint on the level of inventory that can be bought for a certain type of targeting, and to incorporate this into future recommendations.
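A minimal sketch of this learning step, assuming the organization logs recommended versus executed levels per targeting segment, is to treat any shortfall as evidence of a ceiling and to clip future recommendations to it. The class name, the segment names and the update rule (the latest shortfall sets the cap; full execution above the cap raises it) are assumptions for illustration.

```python
class InventoryConstraintLearner:
    """Learns practical inventory ceilings per targeting segment from
    recommended vs. executed buys, and clips future recommendations."""

    def __init__(self):
        self.caps = {}

    def record(self, segment, recommended, executed):
        cap = self.caps.get(segment)
        if executed < recommended:
            # Shortfall: treat the executed level as the current ceiling.
            self.caps[segment] = executed
        elif cap is not None and executed > cap:
            # Fully executed above the old ceiling: the constraint has loosened.
            self.caps[segment] = executed

    def adjust(self, segment, recommended):
        """Recommendation after applying any learned ceiling for the segment."""
        cap = self.caps.get(segment)
        return recommended if cap is None else min(recommended, cap)


# Hypothetical impressions for a hypothetical segment name.
learner = InventoryConstraintLearner()
learner.record("new_parents_25_34", recommended=1_000_000, executed=600_000)
print(learner.adjust("new_parents_25_34", 900_000))
```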

C. Working Memory

The role of working memory is to be the bridge between the perceptual processor and the action processor. An organization’s analytics & executive setup interacts with its environment in two different ways.

- The analytics capability perceives the environment, understands its specifics and codifies this knowledge through models & recommended outcomes.

- The executive capability acts on this intelligence in the short term, and codifies long-run policies associated with repeated actions and their effectiveness.

The role of working memory is to retain this knowledge of perception and associated action, so that the organization can recognize environmental changes, and act on them, more quickly.

As an example, consider a beverage company like Coca-Cola. The role of working memory in such a scenario is to automatically establish leading indicators associated with beverage category headwinds like obesity, nutrition and environmental waste. Using these leading indicators, the working memory can establish, for each geography and each beverage, when it makes more sense to adapt the product to embrace the headwinds than to use marketing to counter them. At present, such decisions rely on intuition and/or multi-year consulting engagements, which are basically an expensive, poor man’s version of a working memory.
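One simple way to screen candidate leading indicators, shown here as an assumption rather than a prescribed method, is to correlate the indicator with sales at a range of lags and keep the lag with the strongest relationship. The function names and series are hypothetical, and correlation at a lag does not establish causation, so a shortlisted indicator would still need to be validated against the estimation models.

```python
import math


def pearson(x, y):
    """Pearson correlation; returns 0.0 if either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def best_leading_lag(indicator, target, max_lag, min_points=3):
    """Return (lag, r): the lag at which indicator[t] best tracks target[t + lag],
    scored by absolute Pearson correlation. Both series share one time index."""
    best_lag, best_r = 0, 0.0
    for lag in range(1, max_lag + 1):
        x, y = indicator[: len(indicator) - lag], target[lag:]
        if len(x) < min_points:
            break
        r = pearson(x, y)
        if abs(r) > abs(best_r):
            best_lag, best_r = lag, r
    return best_lag, best_r


# Hypothetical monthly series: an obesity-related search index and category sales.
search_index = [1, 3, 2, 5, 4, 6, 3, 7, 5, 8]
sales = [50, 50] + [50 - 2 * v for v in search_index[:-2]]
print(best_leading_lag(search_index, sales, max_lag=4))
```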

PARTING THOUGHTS

Through this article we have tried to establish a framework for incorporating some of the latest AI & machine learning thinking into everyday organizational analytics. Given the abundance of data and cheap computing, it is amply clear that the next generation of leading brands & products will rely heavily on “AI” or “machine learning” algorithms.

However, it is important to recognize that these algorithms do not have to be black boxes which prescribe actions. These algorithms are a mechanism for learning from massive amounts of information & data. These algorithms can also be evaluated & interrogated to answer specific business questions.

The role of these algorithms is to augment organizational expertise and not replace it. In that sense, organizational design is as important as the algorithmic design to successfully leverage this capability.

Over the past six years, as we have worked with a vast number of brands across geographies, we have had a front-row seat to an emerging marketing analytics landscape. Our hope is that the analytics framework shared in this article will help you get started on integrating AI into your analytics organization. Global trends affecting marketing today are evolving rapidly; the marketing analytics function must sharpen its focus on the corresponding opportunities and respond strongly. While this raises many questions about how best to redefine the organizational structure to capture value, it is clear that the marketing analytics function will have a much more strategic part to play. Is your marketing analytics function ready to take this on? Send your questions to us.

About the author: Harpreet Singh is CTO & co-founder at Kvantum. Currently, Harpreet’s primary area of interest & research is establishing evolutionary, real-time, simulation-based algorithmic systems to track business performance data across all functions of an enterprise, and to provide real-time tracking & guidance.

© 2019 Kvantum, Inc.